Approved for Public Release; Distribution Unlimited. Public Release Case Number 21-3587

©2021 The MITRE Corporation

All rights reserved

Abstract

Artificial Intelligence (AI) has become increasingly prevalent in the public imagination, and as a result, a variety of commercial enterprises have begun marketing products as “AI-enabled.” However, “AI” has become a marketing buzzword, and not every product claiming to be AI-enabled actually uses AI in a meaningful way. Because AI is such a broad area of study and vendors often hesitate to share details of their underlying algorithms, it is often difficult to determine how effective these implementations are.

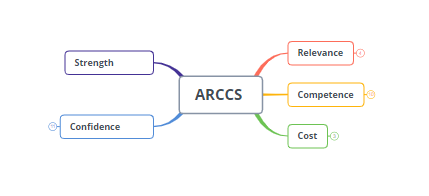

This document proposes the AI Relevance Competence Cost Score (ARCCS) framework, an evaluation methodology and metrics to assess the degree and effectiveness of the AI component of a commercially offered, AI-enabled tool. The framework guides the assessor to organize the available evidence, evaluate its strength, and determine whether a product performs as advertised in a technically relevant manner. Additionally, ARCCS serves as a guide for further investigation once such a determination is made.

ARCCS provides a method for any organization considering the acquisition of AI-enabled tools to make more informed, rigorous, and consistent assessments and final decisions.

- MITRE ARCCS Technical Report (PDF) - This MITRE Technical Report (MTR) documents the ARCCS framework and includes all necessary instructions and scoring guidelines.

- MITRE ARCCS Template (Excel spreadsheet) - This spreadsheet contains the scoring questions and scoring calculations. After reading the MTR, use it to conduct an assessment.

- Web-based MITRE ARCCS Tool - This is a web-based implementation of the ARCCS framework, equivalent to the spreadsheet, that runs in your browser.

- ARCCS Presentation at the 2021 AI Summit, New York City, December 8-9.

Please send any comments or questions to: arccs@mitre.org